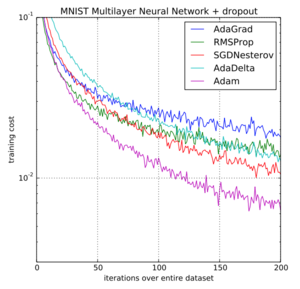

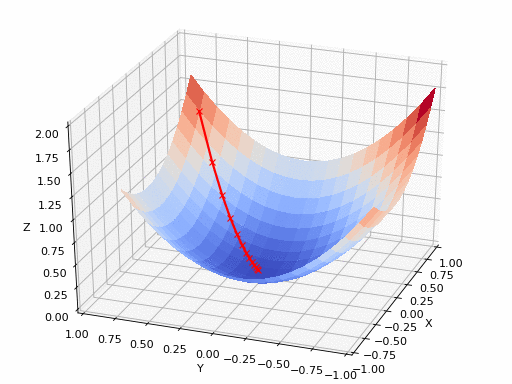

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

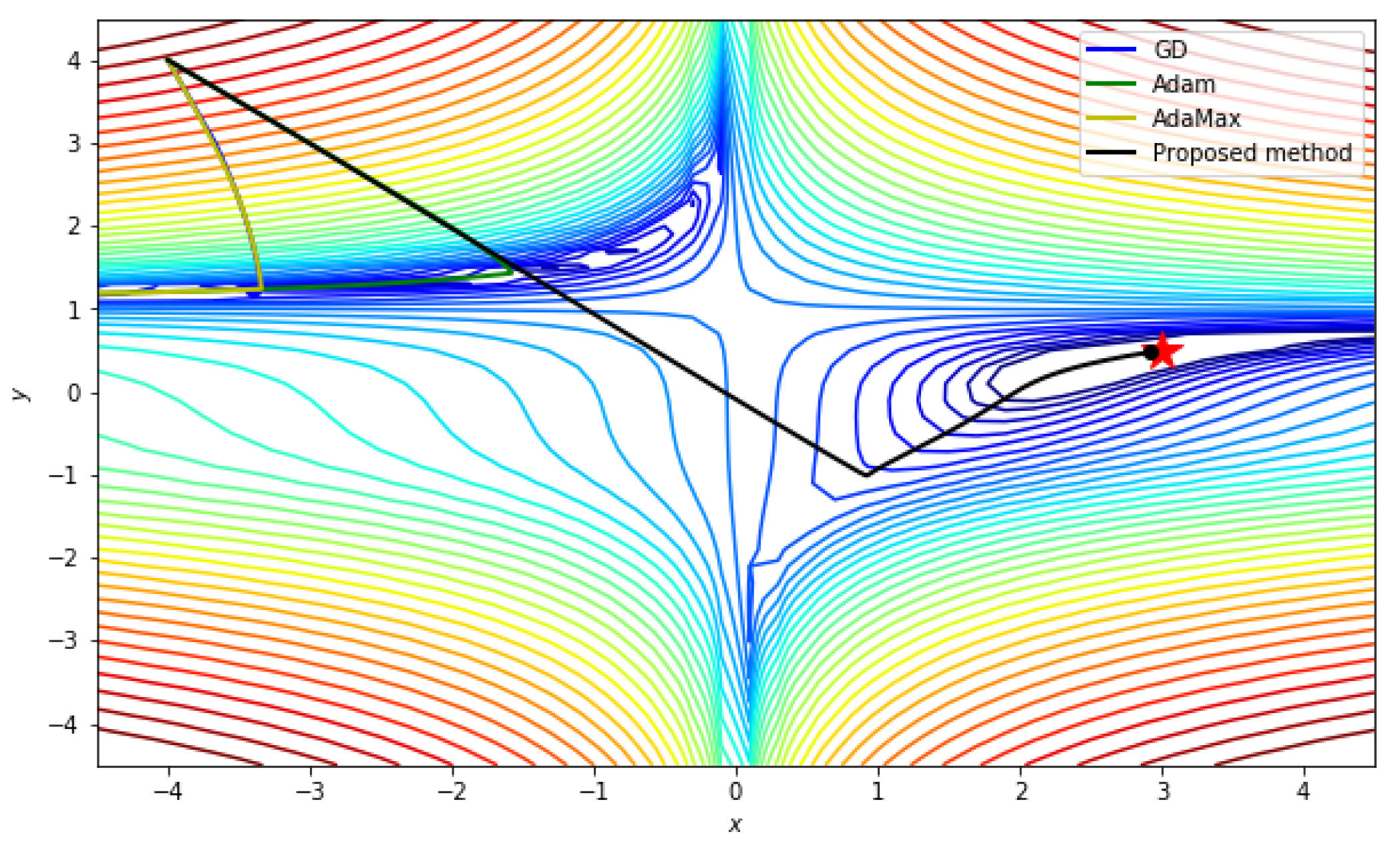

Applied Sciences | An Effective Optimization Method for Machine Learning Based on ADAM

![Transformer Quality in Linear Time | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/dc0102a51a9d33e104a4a3808a18cf17f057228c/13-Table7-1.png)